Discreet Log #5: Improving Safety with Cwtch UI Tests

16 Apr 2021

In my last post, I discussed porting Cwtch’s user interface from Qt to Flutter (using our common Go backend).

This week, I’m going to give an introduction to how we use Flutter’s testing tools to improve the quality and safety of the new Cwtch UI.

We will take a look at Flutter widget and integration tests, and how we are using them to test everything from our custom textfields to safety critical features like “does blocking actually work?”.

Flutter test

Flutter’s flutter_test package provides most of what you need to write and automate tests.

For example, here are some basic tests written to test input handling and validation for our textfield widget, configured to act as a required integer in a form:

```dart
import 'package:flutter/material.dart';
import 'package:flutter_test/flutter_test.dart';

void main() {
  testWidgets('textfield configured as a required integer', (WidgetTester tester) async {
    // testHarness, testWidget, ctrlr1, formKey, strLabel1, strFail1, strFail2
    // and the file() golden-path helper come from the test setup (not shown).

    // Inflate the widget to get its initial appearance
    await tester.pumpWidget(testHarness);
    // Make sure it looks like the screenshot on file
    await expectLater(find.byWidget(testHarness), matchesGoldenFile(file('form_init')));
    // Make sure the placeholder text is correct
    expect(find.text(strLabel1), findsOneWidget);

    // "42": valid integer test
    // focus on the text field
    await tester.tap(find.byWidget(testWidget));
    // advance a frame, type, then advance more frames
    await tester.pump();
    await tester.enterText(find.byWidget(testWidget), "42");
    await tester.pumpAndSettle();
    // make sure the input text is rendering as expected
    await expectLater(find.byWidget(testHarness), matchesGoldenFile(file('form_42')));
    expect(find.text("42"), findsOneWidget);
    // make sure no errors are being displayed
    expect(find.text(strFail1), findsNothing);
    expect(find.text(strFail2), findsNothing);
    // reset state for next test
    ctrlr1.clear();
    await tester.pumpAndSettle();

    // "alpha quadrant": test rejection for being non-integer
    await tester.tap(find.byWidget(testWidget));
    await tester.pump();
    await tester.enterText(find.byWidget(testWidget), "alpha quadrant");
    await tester.pumpAndSettle();
    await expectLater(find.byWidget(testHarness), matchesGoldenFile(file('form_alpha')));
    // text is rendered correctly...
    expect(find.text("alpha quadrant"), findsOneWidget);
    expect(find.text(strFail1), findsNothing);
    // ...and so is the appropriate error message
    expect(find.text(strFail2), findsOneWidget);
    ctrlr1.clear();
    await tester.pumpAndSettle();

    // "": empty string rejection test
    // ctrlr1.clear() doesn't trigger validation the way a keypress does,
    // so validate the form explicitly:
    formKey.currentState!.validate();
    await tester.pumpAndSettle();
    await expectLater(find.byWidget(testHarness), matchesGoldenFile(file('form_final')));
    // this time we want a different error message
    expect(find.text(strFail1), findsOneWidget);
    expect(find.text(strFail2), findsNothing);
  });
}
```
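The test above relies on the field’s validator returning one message for empty input (strFail1) and a different one for non-integer input (strFail2), while accepting "42". As a rough sketch of what such a validator might look like, here is a plain Dart function; the names validateRequiredInteger, requiredMessage and notIntegerMessage, and the message wording, are hypothetical and not taken from the Cwtch code base. It assumes a null-safe Dart (2.12 or later).

```dart
// Hypothetical error messages standing in for strFail1 and strFail2.
const String requiredMessage = 'This field is required';
const String notIntegerMessage = 'Please enter a whole number';

/// Sketch of a "required integer" validator. It has the shape that
/// Flutter's TextFormField.validator expects: it returns null for valid
/// input and an error message to display otherwise.
String? validateRequiredInteger(String? value) {
  // Empty input gets the "required" error (strFail1 in the test).
  if (value == null || value.isEmpty) {
    return requiredMessage;
  }
  // Anything that does not parse as an int gets the second error (strFail2).
  if (int.tryParse(value) == null) {
    return notIntegerMessage;
  }
  // null tells the enclosing Form that this field is valid.
  return null;
}
```

Because it matches the `String? Function(String?)` shape of a form-field validator, it could be passed as `validator: validateRequiredInteger`. Keeping the rules in a free function also means the accept/reject logic can be unit-tested without pumping any widgets, leaving the widget test to focus on rendering.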

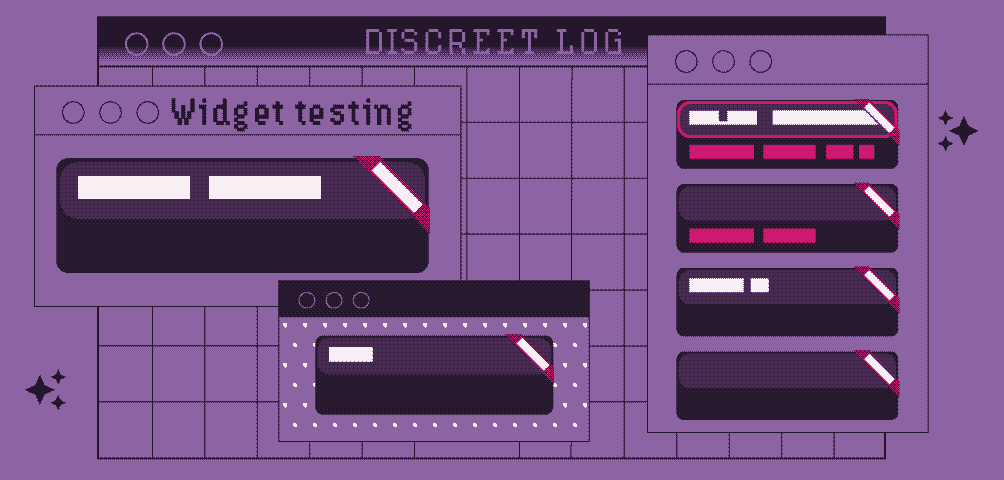

The matchesGoldenFile matcher will automatically create the reference images for you when you run flutter test --update-goldens, which is very convenient:

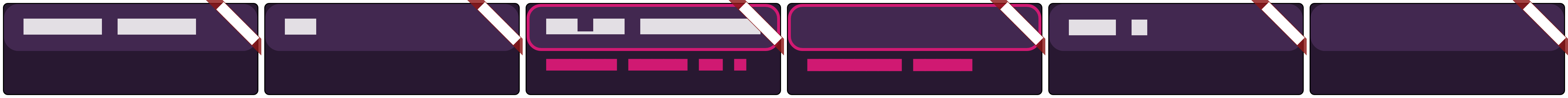

If a regression later causes the widget to render differently, the difference is detected visually, and flutter test is even kind enough to calculate image diffs for us, for example when the input-validation-error colour has been “accidentally” set to green.
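To make the idea of an image diff concrete, here is a deliberately simplified model of a golden comparison: two same-sized RGBA pixel buffers are compared pixel by pixel, producing a count of changed pixels and a mask marking where they differ. This is an illustration under that assumption only; flutter_test’s default comparator (LocalFileComparator) also decodes the PNG files and writes diff images when a comparison fails, and the ImageDiff and diffRgba names below are hypothetical.

```dart
import 'dart:typed_data';

/// Result of comparing two same-sized RGBA images.
class ImageDiff {
  ImageDiff(this.differentPixels, this.totalPixels, this.mask);

  final int differentPixels;
  final int totalPixels;

  /// One entry per pixel: true where the two images differ.
  final List<bool> mask;

  /// Fraction of pixels that changed (0.0 for an empty image).
  double get diffFraction =>
      totalPixels == 0 ? 0.0 : differentPixels / totalPixels;

  /// A golden test passes only if nothing changed.
  bool get matches => differentPixels == 0;
}

/// Compares two RGBA buffers (4 bytes per pixel) pixel by pixel.
ImageDiff diffRgba(Uint8List golden, Uint8List actual) {
  if (golden.length != actual.length || golden.length % 4 != 0) {
    throw ArgumentError('Images must be RGBA buffers of the same size');
  }
  final pixels = golden.length ~/ 4;
  final mask = List<bool>.filled(pixels, false);
  var different = 0;
  for (var p = 0; p < pixels; p++) {
    final i = p * 4;
    // A pixel is unchanged only if all four channels match.
    final same = golden[i] == actual[i] &&
        golden[i + 1] == actual[i + 1] &&
        golden[i + 2] == actual[i + 2] &&
        golden[i + 3] == actual[i + 3];
    if (!same) {
      mask[p] = true;
      different++;
    }
  }
  return ImageDiff(different, pixels, mask);
}
```

With a two-pixel golden image whose first pixel is red, and a test image where that pixel has turned green, diffRgba reports one changed pixel out of two and marks only the first pixel in the mask; the mask is what a diff image visualizes.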

Integration testing

Okay, so we’ve verified that textfields are fields that can hold text… are you on the edge of your seat yet? Fortunately, once all the widget and unit testing is over, we can also use this same package to run UI-based functional (integration) tests of Cwtch that check more interesting and important behaviours.

For example, consider a critical bug occurring in safety/anti-harassment features like the “block” function. An error here has the potential for damaging consequences, so we would like to take extra steps to make sure that, for example, work on another feature doesn’t accidentally cause the block function to stop working.

When testing blocking, it isn’t enough to check that a toggle on a settings pane somewhere gets changed and saved; we also have to verify that new messages from blocked contacts never appear.

Fortunately, it’s relatively easy to check that something is not appearing or changing, using the same image-diff technique from above. We can test both that receiving messages works and that messages from blocked contacts are not shown, using this sort of test:

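```dart
import 'package:flutter/material.dart';
import 'package:flutter_test/flutter_test.dart';
// `app` is the Cwtch UI's main.dart imported with `as app`, and testerProfile
// names a test profile that has a blocked contact (both defined elsewhere).

void main() {
  testWidgets('messages from a blocked contact do not appear', (WidgetTester tester) async {
    // start the app and render a frame
    app.main();
    await tester.pump();

    // log in to a profile with a blocked contact
    await tester.tap(find.text(testerProfile));
    await tester.pump();
    expect(find.byIcon(Icons.block), findsOneWidget);

    // use the debug control to inject a message from the contact
    await tester.tap(find.byIcon(Icons.bug_report));
    await tester.pump();

    // screenshot test
    await expectLater(find.byKey(const Key('app')), matchesGoldenFile('blockedcontact.png'));

    // any active message badges?
    expect(find.text('1'), findsNothing);
  });
}
```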

If the message from the blocked contact causes any unexpected visual change in the interface on its arrival, this test will catch it and alert us before the bug can make its way onto our users’ devices.

For example, suppose we accidentally showed a “messages received” counter-badge on the portrait for a blocked contact during a test; it would be caught both by the screenshot comparison and by the check that no “1” badge is displayed.

I’m a big fan of testing this way. While adding these tests to the pipeline, it was easy to see how tests like them could be derived from user stories and used to keep those stories working in the future.

Of course, the test cases themselves have to be created, reviewed, and occasionally updated by human eyes, but tests like these provide at least an extra level of confidence that things still work the way we expect them to. These tests also work in tandem with the automated integration tests for our library to fully exercise the relevant code (we will be talking more about that in an upcoming Discreet Log).

Code coverage

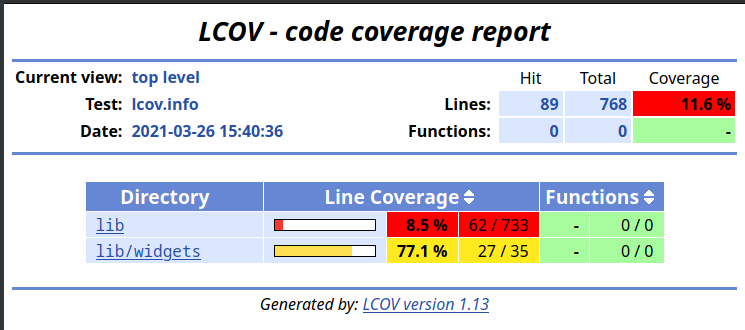

When running tests, it’s trivial to add the --coverage argument (flutter test --coverage) to have code coverage information calculated at the same time.

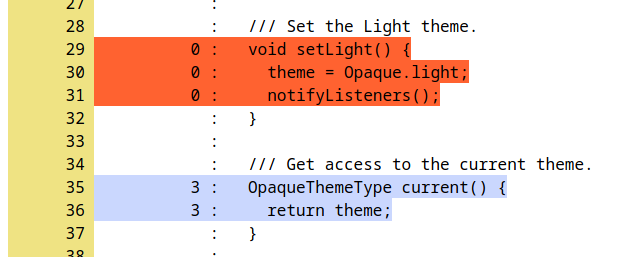

This generates a file named coverage/lcov.info, which can be fed into lcov’s genhtml or any other compatible visualization tool, resulting in a coverage report…

…which can be further drilled into, down to individual source lines.
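The lcov.info file is plain text: each source file’s section starts with an SF:<path> record, lists one DA:<line>,<hit count> record per instrumented line, and ends with end_of_record. Visualization tools do much more than this, but as a minimal sketch of where the percentages in a coverage report come from, the following Dart code counts instrumented and executed lines per file. The FileCoverage and parseLcov names are hypothetical, and the sketch deliberately ignores the function, branch, and LF/LH summary records.

```dart
/// Line coverage for one source file, as recorded in an lcov tracefile.
class FileCoverage {
  FileCoverage(this.path);

  final String path;
  int linesFound = 0; // number of DA records (instrumented lines)
  int linesHit = 0; // DA records with a hit count above zero

  /// Percentage of instrumented lines executed (0.0 if none are instrumented).
  double get percent => linesFound == 0 ? 0.0 : 100.0 * linesHit / linesFound;
}

/// Parses the SF/DA/end_of_record lines of an lcov tracefile.
List<FileCoverage> parseLcov(String contents) {
  final files = <FileCoverage>[];
  FileCoverage? current;
  for (final raw in contents.split('\n')) {
    final line = raw.trim(); // also strips any trailing '\r'
    if (line.startsWith('SF:')) {
      current = FileCoverage(line.substring(3));
    } else if (line.startsWith('DA:') && current != null) {
      // DA:<line number>,<execution count>[,<checksum>]
      final fields = line.substring(3).split(',');
      if (fields.length >= 2) {
        current.linesFound++;
        if ((int.tryParse(fields[1]) ?? 0) > 0) current.linesHit++;
      }
    } else if (line == 'end_of_record' && current != null) {
      files.add(current);
      current = null;
    }
  }
  return files;
}
```

Reading the generated file with `File('coverage/lcov.info').readAsStringSync()` from dart:io and passing the result to parseLcov would yield one FileCoverage per source file, which is essentially the per-file table a coverage report shows before you drill into individual lines.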

Automation

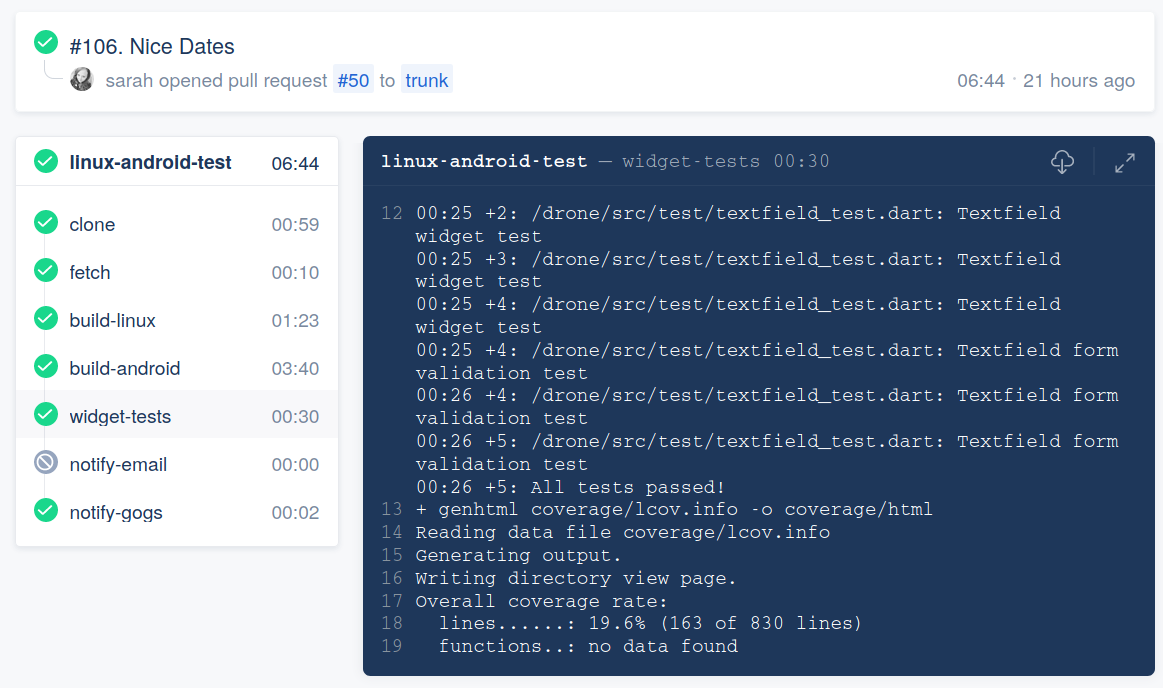

Thanks to Dan’s work on our build automation, we can have Drone run all these tests for us automatically and update PRs with the result:

Conclusion

With so many great tools at our disposal to help make Cwtch a pleasant and secure experience, I’m excited to see the finished product, put Cwtch into Beta, and start getting it further out into the world! Quality work takes time and resources; if you’d like to help us with Cwtch, please consider donating!